Lately, I’ve been diving into the intersection of three fields: machine learning, behavioral science, and healthcare. What I’m curious about is how we might transform healthcare data (electronic health records, clinical notes, medical images) into insights and interventions that could improve how medicine is practiced. What software tools can we build to extend physicians’ decision-making capabilities?

In a 2007 New Yorker article, Atul Gawande articulated the question as, “expertise is the mantra of modern medicine, but what do you do when expertise is not enough?” In other words, how do we handle human fallibility? In a 2011 New Yorker article, he observed that while nearly all the world’s top athletes and musicians have coaches, doctors rarely have coaches or mentors beyond their residencies or fellowships. In life, there’s always room for improvement. A coach offers an external perspective; introspection and self-reflection offer an internal one. With data science and statistics, we can support both by playing back past episodes to identify trends and anomalies and potentially improve future decision-making. For example, knowledge of medical outcome likelihoods, insights into where intuition conflicts with reality, data on where clinical practice goes awry, personalized statistics on performance, and relevant reminders of the latest medical research may all change how physicians make decisions. In fact, these kinds of interventions have already changed medical decision-making.

Sometimes there’s a simple solution

Details matter, and they’re easy to forget, even for experts. Checklists can dramatically improve medical outcomes. In 2006, Peter Pronovost and coauthors published a study showing that a checklist (and properly incentivized enforcement of said checklist) decreased Catheter-Related Blood Stream Infections (CR-BSI) from 0.27% to 0% in the first three months. The checklist for CR-BSI looked like this:

- CR-BSIs are associated with increased morbidity, mortality and costs of care.

- CR-BSIs are a preventable complication that causes as many as 11 deaths every day in the U.S.

- The following interventions decrease the risk for CR-BSIs:

  - Appropriate hand hygiene,

  - Use of chlorhexidine for skin preparation,

  - Use of full-barrier precautions during central venous catheter insertion,

  - Subclavian vein placement as the preferred site, and

  - Removing unnecessary central venous catheters.

For physicians and nurses, this informational intervention was neither novel nor controversial, yet it saved ~1,500 patients’ lives and reduced medical costs by ~175 million dollars within the first 18 months of its implementation. That’s one example of how to address the cognitive blind spots of medical professionals.

Medical tests require statistical literacy

Statistics are tricky. A misunderstanding of statistics can lead to bad decision-making. For a simple example, consider the difference between absolute risk and relative risk. If a side effect occurs in 1 in 10,000 patients on an existing drug and in 2 in 10,000 patients on a new drug, then we could either say (1) the new drug increased the relative risk by 100 percent or (2) the new drug increased the absolute risk by 0.01 percentage points. The two statements feel very different, but they describe the same change.
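The arithmetic behind those two framings is short enough to sketch. The function name `risk_change` and its output keys are made up for illustration; only the 1-in-10,000 and 2-in-10,000 figures come from the example above.

```python
def risk_change(baseline_risk: float, new_risk: float) -> dict:
    """Express the same change in risk in relative and absolute terms."""
    absolute_increase = new_risk - baseline_risk
    relative_increase = absolute_increase / baseline_risk
    return {
        # Change as a percentage of the baseline risk.
        "relative_increase_pct": relative_increase * 100,
        # Change in percentage points of the whole population.
        "absolute_increase_pct_points": absolute_increase * 100,
        # Additional affected patients per 10,000 treated.
        "extra_cases_per_10000": absolute_increase * 10_000,
    }


result = risk_change(baseline_risk=1 / 10_000, new_risk=2 / 10_000)
print(f"Relative increase: {result['relative_increase_pct']:.0f}%")
print(f"Absolute increase: {result['absolute_increase_pct_points']:.2f} percentage points")
print(f"Extra cases per 10,000 patients: {result['extra_cases_per_10000']:.0f}")
```

Reporting both numbers side by side is one simple way a decision-support tool could keep a "100 percent increase" headline from obscuring how rare the side effect still is.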

In 2007, Gerd Gigerenzer and coauthors published a study revealing that few gynecologists understand the likelihood a patient with a positive mammogram has breast cancer. They asked the following question:

Assume you conduct breast cancer screening using mammography in a certain region. You know the following information about the women in this region:

- The probability that a woman has breast cancer is 1% (prevalence)

- If a woman has breast cancer, the probability that she tests positive is 90% (sensitivity)

- If a woman does not have breast cancer, the probability that she nevertheless tests positive is 9% (false positive rate)

A woman tests positive. She wants to know from you whether that means that she has breast cancer for sure, or what the chances are. What is the best answer?

- The probability that she has breast cancer is about 81%.

- Out of 10 women with a positive mammogram, about 9 have breast cancer.

- Out of 10 women with a positive mammogram, about 1 has breast cancer.

- The probability that she has breast cancer is about 1%.

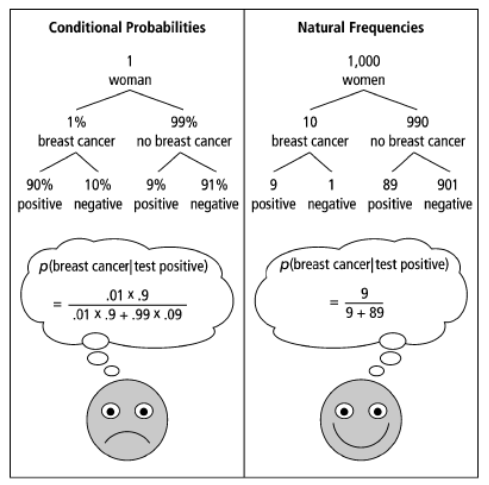

What’s tricky about this question is handling conditional probabilities rather than natural frequencies. Conditional probabilities require understanding Bayes’ Rule whereas natural frequencies simply require addition and division, which most people feel quite comfortable with.

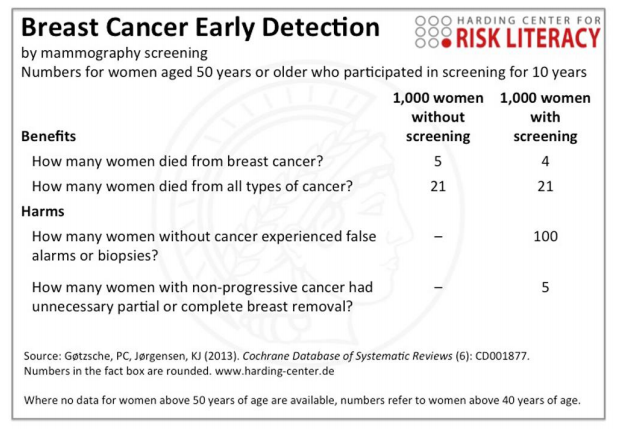
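To make the comparison concrete, here is a small sketch that answers the mammogram question both ways, using only the three numbers given in the question (1% prevalence, 90% sensitivity, 9% false positive rate). The function names and the population size of 1,000 are illustrative choices, not part of the study.

```python
def ppv_bayes(prevalence: float, sensitivity: float, false_positive_rate: float) -> float:
    """P(cancer | positive test) via Bayes' Rule on conditional probabilities."""
    true_positive = prevalence * sensitivity
    false_positive = (1 - prevalence) * false_positive_rate
    return true_positive / (true_positive + false_positive)


def ppv_natural_frequencies(prevalence: float, sensitivity: float,
                            false_positive_rate: float, population: int = 1000) -> float:
    """Same quantity, computed by counting people in an imagined population."""
    with_cancer = population * prevalence                    # 10 of 1,000 women
    without_cancer = population - with_cancer                # 990 women
    true_positives = with_cancer * sensitivity               # 9 women
    false_positives = without_cancer * false_positive_rate   # about 89 women
    return true_positives / (true_positives + false_positives)


bayes = ppv_bayes(0.01, 0.90, 0.09)
counts = ppv_natural_frequencies(0.01, 0.90, 0.09)
print(f"Bayes' Rule:         {bayes:.1%}")   # 9.2%
print(f"Natural frequencies: {counts:.1%}")  # 9.2%
```

Both routes give roughly 9%, so "out of 10 women with a positive mammogram, about 1 has breast cancer" is the best answer. The counting version needs nothing beyond multiplication, addition, and division.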

By pointing out instances where our intuition conflicts with the stats, we can specifically address these cognitive blind spots and better understand their general shape. The breast cancer statistics above are very close to the actual statistics. Depending on your perspective, screening for early detection of breast cancer may lead to more harm than good. Furthermore, it’s possible (and often the case) that early screening increases survival rates only by moving the start date of the survival clock earlier, an effect known as lead-time bias, rather than by decreasing the mortality rate.
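The last point, that earlier detection can raise survival rates without changing mortality, is easy to see with a toy example. Everything here is hypothetical: the cohort, the ages, and the assumption that every patient dies at the same age regardless of when the disease is found.

```python
AGE_AT_DEATH = 70  # assumed identical for every patient, screened or not


def five_year_survival_rate(ages_at_diagnosis: list) -> float:
    """Fraction of patients still alive five years after their diagnosis."""
    survivors = sum((AGE_AT_DEATH - age) >= 5 for age in ages_at_diagnosis)
    return survivors / len(ages_at_diagnosis)


# Hypothetical cohorts of 100 patients each.
diagnosed_by_symptoms = [67] * 100    # found late, 3 years before death
diagnosed_by_screening = [63] * 100   # found early, 7 years before death

print(five_year_survival_rate(diagnosed_by_symptoms))   # 0.0
print(five_year_survival_rate(diagnosed_by_screening))  # 1.0
```

Five-year survival jumps from 0% to 100%, yet nobody in this made-up cohort lives a day longer. This is why mortality rates, rather than survival rates, are the more informative statistic for judging a screening program.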

Clinical practices can go awry

Like all humans, doctors make mistakes. In 2014, Choosing Wisely compiled lists of tests, treatments, and disposition decisions that physicians should avoid because they are ineffective or cost more than other options of similar effectiveness. The lists on Choosing Wisely are developed by each medical society separately, so there’s one from the American College of Cardiology, one from the American Association of Neurological Surgeons, etc. Peer-reviewed research in medicine seems to suggest Choosing Wisely works. 2014 seems to have been the year of the lists: researchers in emergency medicine also compiled a list of 64 clinical actions of little value and identified a top 5 list of what not to do. Here’s the top 5 list for emergency medicine:

- Do not order computed tomography (CT) of the cervical spine for patients after trauma who do not meet the National Emergency X-ray Utilization Study (NEXUS) low-risk criteria or the Canadian C-Spine Rule.

- Do not order CT to diagnose pulmonary embolism without first risk stratifying for pulmonary embolism (pretest probability and D-dimer tests if low probability).

- Do not order magnetic resonance imaging of the lumbar spine for patients with lower back pain without high-risk features.

- Do not order CT of the head for patients with mild traumatic head injury who do not meet New Orleans Criteria or Canadian CT Head Rule.

- Do not order coagulation studies for patients without hemorrhage or suspected coagulopathy (e.g., with anticoagulation therapy, clinical coagulopathy).

What’s tricky about these recommendations is how we measure risk. For example, the pretest probability for pulmonary embolism (PE) is usually calculated with the Wells’ Score, which is determined in part by whether the physician suspects a PE. The Wells’ Score is often criticized for this circular reasoning. It is easy to imagine a more data-driven approach using electronic health records. Ultimately, the goal of estimating pretest probability is to make the best decision possible. With a low pretest probability, a physician can order a D-dimer lab and, if the result is negative, rule out PE without a CT scan of the lungs. Ruling out PE with a lab test can reduce costs, exposure to unnecessary radiation, and wait times for patients. Statistics on how often negative D-dimer labs are followed by lung CT scans checking for PE, how often those CT scans happen without a D-dimer lab, how often patients turn out to have PE under these conditions, and how often physicians miss a PE diagnosis could help update these risk assessments. Likewise, a data-driven approach could let risk tests handle more complexity and support continued evaluation of not just efficacy but effectiveness.
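As a rough sketch of what those statistics could look like in code, assume each emergency visit has already been reduced to three fields. The `Encounter` record, its field names, and the sample data are all hypothetical; real EHR data would need considerable cleaning to get to this shape.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Encounter:
    """Hypothetical summary of one emergency visit with suspected PE."""
    d_dimer: Optional[str]  # "negative", "positive", or None if not ordered
    ct_ordered: bool        # was a chest CT for PE ordered?
    pe_confirmed: bool      # PE confirmed by imaging or on follow-up


def rate(numerator: int, denominator: int) -> Optional[float]:
    """Proportion, or None when there are no cases to divide by."""
    return numerator / denominator if denominator else None


def pe_workup_stats(encounters: List[Encounter]) -> dict:
    negative = [e for e in encounters if e.d_dimer == "negative"]
    scanned = [e for e in encounters if e.ct_ordered]
    return {
        # How often a negative D-dimer was followed by a CT anyway.
        "ct_after_negative_d_dimer": rate(sum(e.ct_ordered for e in negative), len(negative)),
        # How often a CT was ordered with no D-dimer at all.
        "ct_without_d_dimer": rate(sum(e.d_dimer is None for e in scanned), len(scanned)),
        # How often a CT actually found a PE.
        "pe_yield_of_ct": rate(sum(e.pe_confirmed for e in scanned), len(scanned)),
        # How often PE was present despite a negative D-dimer.
        "pe_after_negative_d_dimer": rate(sum(e.pe_confirmed for e in negative), len(negative)),
    }


# Made-up sample data for illustration only.
encounters = [
    Encounter("negative", False, False),
    Encounter("negative", True, False),
    Encounter("positive", True, True),
    Encounter(None, True, False),
    Encounter(None, True, True),
    Encounter("negative", False, False),
]
stats = pe_workup_stats(encounters)
for name, value in stats.items():
    print(name, value)
```

Tracked per department or per physician over time, numbers like these are the kind of personalized feedback that could show where practice drifts from the recommendation.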

Last month, researchers published a comprehensive review of randomized clinical trials from three medical journals revealing 396 medical reversals. 33% of these reversals were related to medications, 20% to procedures, 4% to either screening tests or treatment algorithms, and the remaining 43% fell into other categories. The New York Times recapped this review with a couple of the highlights:

- Torn knee meniscus? Try physical therapy first, surgery later.

- If a pregnant woman’s water breaks prematurely, the baby does not have to be delivered immediately.

- To treat emergency room patients in acute pain, a single dose of oral opioids is no better than drugs like aspirin and ibuprofen.

Standards of care change as we learn more. Naturally, physicians need to adapt. Back in 2000, it took 17 years on average for evidence-based findings to reach the majority of clinical practices. It’s probably way faster now, but it would be useful if medical research reversals and clinical guidelines could be integrated into clinical software to keep physicians informed of what’s relevant to them.

Cognitive biases can be found throughout medical practice

Blind spots come in a variety of shapes and sizes. In 2018, Jonathan Howard, a neurologist and psychiatrist at NYU and Bellevue Hospital, published a book titled Cognitive Errors and Diagnostic Mistakes: A Case-Based Guide to Critical Thinking in Medicine. He illustrates examples in medicine of the ambiguity effect, bandwagon effect and authority bias, confirmation bias, motivated cognition, the backfire effect, curse of knowledge, decision fatigue, feedback sanction, financial bias, Forer effect, framing effect and loss aversion, affective error, hot hand fallacy, survival bias, hindsight bias, illusory correlation, in-group favoritism, information bias, nosology trap, omission bias, overchoice and decision avoidance, overconfidence bias, patient satisfaction error, anchoring bias, Occam’s error, availability heuristic, yin-yang error, diagnosis momentum, triage cueing, failure-to-close error, representativeness bias, screening errors, selection bias, Galileo fallacy, alarm fatigue, defensive medicine, graded clinician error, and electronic medical record error. One way to improve healthcare is to address these biases systematically: first identify where they happen, and then design interventions with decision-support technology to handle them.

The space in between computer vision, intelligent electronic health records, smart hospitals, and personalized medicine

There’s already amazing progress in computer vision for medical imagery and in machine learning to extend what we can do with electronic health records (EHR). Google’s deep-learning AI for detecting metastatic breast cancer achieved similar performance to pathologists. Deep learning models outperform radiologists on detecting lung cancer from low-dose chest CT scans. Convolutional neural networks have been shown to identify the most common cancers and the deadliest skin cancer at a level of competence comparable to dermatologists. Deep learning models can predict cardiovascular risk factors from retinal images. There are machine learning models to predict medical events and readmission rates from EHR data and machine learning models to parse breast pathology reports. We do have to be wary of potential adversarial attacks on medical images, and we can train models to predict when we should ask for medical second opinions. How can this new research and technology be incorporated into how healthcare is delivered in the future?

The future of medicine looks like treatments personalized to each patient, within a healthcare system where clinical evidence informs medical procedures and technology extends medical professionals’ ability to practice medicine and provide care. The next generation of electronic health records should have built-in machine learning and artificial intelligence to offer clinical decision support, personalized treatment suggestions, and data-driven risk assessments of potential treatment outcomes. This is tricky because it’s a medical, technical, social, and political problem, and one of the biggest barriers to change is the incentive system that drives healthcare to focus on dollars instead of health.

A recent post on Less Wrong covers a variety of opportunities in developing personalized medicine: (1) lifestyle optimization, (2) genetics-based personalization, (3) preventing medical error, (4) AI diagnoses, (5) connecting patients with experimental therapies, (6) translating evidence-based medicine into practice, and (7) N=1 translational medicine experimentation. I see a large overlap among preventing medical error, AI diagnoses, and translating evidence-based medicine into practice; they all fall under the umbrella of enhancing awareness and decision-making capabilities. One domain potentially amenable to an intervention is clinical pathways.

Clinical pathways are the sequences of clinical decisions that physicians make to handle a particular patient’s case. This includes assessing the patient, ordering labs and imaging, initiating treatments, prescribing medications, discharging the patient, and re-admitting the patient. In their ideal form, clinical pathways (also known as care pathways, integrated care pathways, critical pathways, or care maps) are decision trees intended to reduce variability in clinical care and promote the practice of evidence-based medicine. For example, here are clinical pathways when a physician suspects pulmonary embolism, chronic kidney disease, hypertension, or nausea and vomiting of pregnancy. While these examples of clinical pathways are intended to be standards of care, the construction, design, and display of these pathways are not standardized. A first-order question is whether we can transform EHR data into the component parts of clinical pathways. If we can identify clinical pathway units and components in EHR data, then we could study outcomes, analyze the effectiveness of standard-of-care clinical pathways and compare them to other pathways, and automatically discover highly effective clinical pathways for subgroups. With access to a picture archiving and communication system (PACS) for running models on medical imagery, and with access to clinical notes, we can augment EHR clinical pathways with time-stamped results and more context.
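A minimal sketch of that first-order question, assuming EHR data can be flattened into time-stamped events per encounter: order each encounter’s actions in time to get its empirical pathway, then compare outcomes across pathways. The event log, action names, and the readmission outcome below are invented for illustration and do not reflect any real EHR schema.

```python
from collections import defaultdict

# Hypothetical event log: (encounter_id, ISO timestamp, action).
events = [
    ("e1", "2019-01-01T10:00", "assess"),
    ("e1", "2019-01-01T10:30", "d_dimer"),
    ("e1", "2019-01-01T12:00", "discharge"),
    ("e2", "2019-01-02T09:30", "d_dimer"),   # rows may arrive out of order
    ("e2", "2019-01-02T09:00", "assess"),
    ("e2", "2019-01-02T11:00", "discharge"),
    ("e3", "2019-01-03T08:00", "assess"),
    ("e3", "2019-01-03T08:20", "ct_chest"),
    ("e3", "2019-01-03T13:00", "discharge"),
]
# Hypothetical outcome per encounter: was the patient readmitted?
readmitted = {"e1": False, "e2": True, "e3": False}


def extract_pathways(events):
    """Group events by encounter and order them in time to form a pathway."""
    by_encounter = defaultdict(list)
    for encounter_id, timestamp, action in events:
        by_encounter[encounter_id].append((timestamp, action))
    # ISO timestamps sort correctly as strings.
    return {
        encounter_id: tuple(action for _, action in sorted(items))
        for encounter_id, items in by_encounter.items()
    }


def summarize(pathways, outcomes):
    """Count how often each pathway occurs and its readmission rate."""
    counts = defaultdict(lambda: {"n": 0, "readmitted": 0})
    for encounter_id, pathway in pathways.items():
        counts[pathway]["n"] += 1
        counts[pathway]["readmitted"] += int(outcomes[encounter_id])
    return {
        pathway: {"n": c["n"], "readmission_rate": c["readmitted"] / c["n"]}
        for pathway, c in counts.items()
    }


pathways = extract_pathways(events)
summary = summarize(pathways, readmitted)
for pathway, stats in summary.items():
    print(" -> ".join(pathway), stats)
```

Treating a pathway as an ordered tuple of actions is the simplest possible representation. A real system would likely need to handle parallel orders, repeated actions, and confounding between patient severity and the pathway chosen before any comparison of outcomes means much.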

Clinical pathways contextualize the clinical care process, and they naturally encode more information than any single action or decision within the process. If physicians could reflect on empirical clinical pathways and their related performance, then they could either conform to the standards of care or show where standards of care need to change. Incorporating clinical pathways into an intelligent EHR to provide physicians with decision support tools should improve how medicine is practiced, open new opportunities for healthcare research, and alert physicians to medical reversals and new research on the most effective treatment pathways.